In the long and tortuous history of Google’s Privacy Sandbox, the issue of Trusted Execution Environments (TEEs) has been a bone of contention. But what is a TEE, and why does Google insist that we need them?

Sandbox and TEEs

As originally envisaged, the targeting aspects of the Sandbox were to be carried out on users’ devices using the Protected Audience API (PAAPI). This was supposed to protect user privacy whilst still supporting some level of ad targeting. However, it was quickly recognised that devolving this processing to the device would introduce an unacceptable degree of latency into a real-time process where hundredths of a second are vital. In response, Google proposed that those looking to use Privacy Sandbox could run PAAPI off-device but, to preserve the claimed privacy benefits, it would have to run in something called a Trusted Execution Environment (TEE).

A TEE is essentially a secure and auditable computing environment. Provided by a cloud hosting company, a TEE hosts a piece of code (in this case, the PAAPI) alongside a mechanism that attests to the integrity of that code. This attestation verifies that the code being run is the code the operating business says it is, supposedly ensuring that no funny business is going on behind the scenes.
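To make the attestation idea concrete, here is a minimal Python sketch of its core check: the operator publishes a cryptographic measurement of the code it claims to run, and a verifier accepts the environment only if the measurement reported from inside the TEE matches. It assumes that a plain SHA-256 hash of the code image stands in for the measurement; in a real TEE the hardware measures the image and the report is signed with a key chained to the hardware vendor, a step this sketch deliberately omits. The function names and image contents are hypothetical.

```python
import hashlib
import hmac


def measure(image: bytes) -> str:
    """SHA-256 digest standing in for a TEE's measurement of its code image."""
    return hashlib.sha256(image).hexdigest()


def verify_attestation(reported: str, published: str) -> bool:
    """Accept the environment only if its measurement matches the published one.

    hmac.compare_digest compares in constant time, so the check does not leak
    how many leading characters matched.
    """
    return hmac.compare_digest(reported, published)


# Hypothetical images: the operator publishes the hash of the build it claims to run.
published_measurement = measure(b"kv-service build 1.0")

honest = measure(b"kv-service build 1.0")
tampered = measure(b"kv-service build 1.0 + extra logging of user data")

print(verify_attestation(honest, published_measurement))    # True
print(verify_attestation(tampered, published_measurement))  # False
```

Any change to the code, however small, produces a different hash, which is what lets a verifier detect "funny business" without inspecting the code line by line.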

On the surface, this seems like a laudable aim. By insisting that PAAPI is run in a TEE, Google argues, users can be sure that any data about them is being used in a way that is consistent with Google’s privacy claims. This may be true, but it is equally true that one can open a walnut with a sledgehammer. A TEE may achieve the required end goal, but it is a massively disproportionate solution to a relatively simple problem.

TEE challenges

The challenges of TEEs are three-fold.

In the first instance, there is an extremely limited number of cloud hosting providers that can offer a TEE to the specification demanded by Google. Based on analysis by the Movement for an Open Web, only Amazon Web Services (AWS), Microsoft and (surprise, surprise) Google themselves can currently support a PAAPI TEE.

This has clear competitive implications. Any publisher, marketer or AdTech firm looking to use Privacy Sandbox could be in the market for a TEE of this type, and it seems that the platforms have this space sewn up. Baking into Privacy Sandbox a technology standard that appears to reinforce the market dominance of the hyperscalers is hardly a pro-competition solution.

The second challenge is cost. According to our research, hosting alone can cost up to three times as much in a TEE as in a non-TEE environment. Add the complexity of an attestation provider and the overhead of implementing and managing a TEE, and it is clear that Google’s proposed specification has the side effect of imposing significant costs on its competitors. Given that Privacy Sandbox has already come under massive antitrust scrutiny from the UK Competition and Markets Authority, this is not, as they say, a good look.

Thirdly, there is an asymmetry in the insistence on TEEs. The W3C, supported and encouraged by browser owners such as Google and Microsoft, is requiring businesses to use TEEs, but those same browser owners are showing no willingness to run their own business-critical services in the same way. This imposes additional cost and complexity on the broader industry whilst allowing the platforms to operate with impunity. This is nothing less than collusion by the browser owners, facilitated by the W3C, supposedly an independent standards body. TEEs should be ruled out of scope by the W3C to prevent this cartel-like behaviour.

Contracts

The fact is that there is a far simpler and more established solution. Contract law has, for hundreds of years, underpinned agreements between businesses and offers a framework within which agreements can be codified and, if necessary, enforced. A simple contractual agreement between Google and Privacy Sandbox users, committing them to implement it in a compliant way, would provide sufficient safeguards without imposing a complex and costly technology. For those seeking TEE-level assurance, and willing to bear the associated costs, TEEs would be an OPTION, but not MANDATED. There is clearly a risk of bad actors breaching these contractual terms, but that is precisely the risk contract law is designed to address.

The mandatory use of TEEs is a disproportionate response to the problem at hand. TEEs were originally conceived for markets such as banking and defence, where the absolute security of highly sensitive data and processes is paramount. For a situation where we’re dealing with anonymised user data about web surfing, they seem somewhat over-specified!

Moreover, there is a clear imbalance in these proposals. Web browser vendors requiring others to operate TEEs, whilst not doing so themselves for important business functionality, exposes the hypocrisy of W3C standards. Yet again, there is one rule for the web browser and another for everyone else. All animals are equal, but some animals are more equal than others.

We have no objection to the use of TEEs in Privacy Sandbox. If a business feels that it would gain a competitive advantage, it should have the choice to use Privacy Sandbox in this way. However, for others who are satisfied that a contractual agreement provides sufficient protection, this costly and complex technology should not be imposed.

Appendix

Cost of TEEs vs standard implementation (December 2023)

| Cloud provider | Instance price (1 year) | Instance price with TEE (1 year) | KMS cost (per 1M requests) |
|---|---|---|---|
| AWS | $2002.68 | $2002.68 | $3 |
| GCP | $1479.24 | $2249.16 | $3 |
| Azure | $1326 | $5382 | $3 |
| Hetzner | $673.08 | N/A | N/A |
| DigitalOcean | $3624 | N/A | N/A |
| OVH | $1164.96 | N/A | $0 |
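The premium a TEE adds can be read straight off the table. As a quick check, the Python snippet below computes, for each provider, the ratio of the TEE instance price to the standard instance price, using the December 2023 figures exactly as listed (the instance types behind them are not stated, and KMS request costs are left out). On these figures the premium varies widely by provider: none on AWS, roughly 1.5x on GCP and roughly 4x on Azure.

```python
# December 2023 annual instance prices (USD) from the table above:
# (standard instance, instance with TEE); None where no TEE option was listed.
prices = {
    "AWS": (2002.68, 2002.68),
    "GCP": (1479.24, 2249.16),
    "Azure": (1326.00, 5382.00),
    "Hetzner": (673.08, None),
    "DigitalOcean": (3624.00, None),
    "OVH": (1164.96, None),
}


def tee_premium(standard, tee):
    """Ratio of the TEE price to the standard price, or None if no TEE is offered."""
    return None if tee is None else tee / standard


for provider, (standard, tee) in prices.items():
    ratio = tee_premium(standard, tee)
    if ratio is None:
        print(f"{provider}: no TEE option listed")
    else:
        extra = tee - standard
        print(f"{provider}: {ratio:.2f}x the standard price (+${extra:,.2f} per year)")
```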

PAAPI Key/Value Service Design

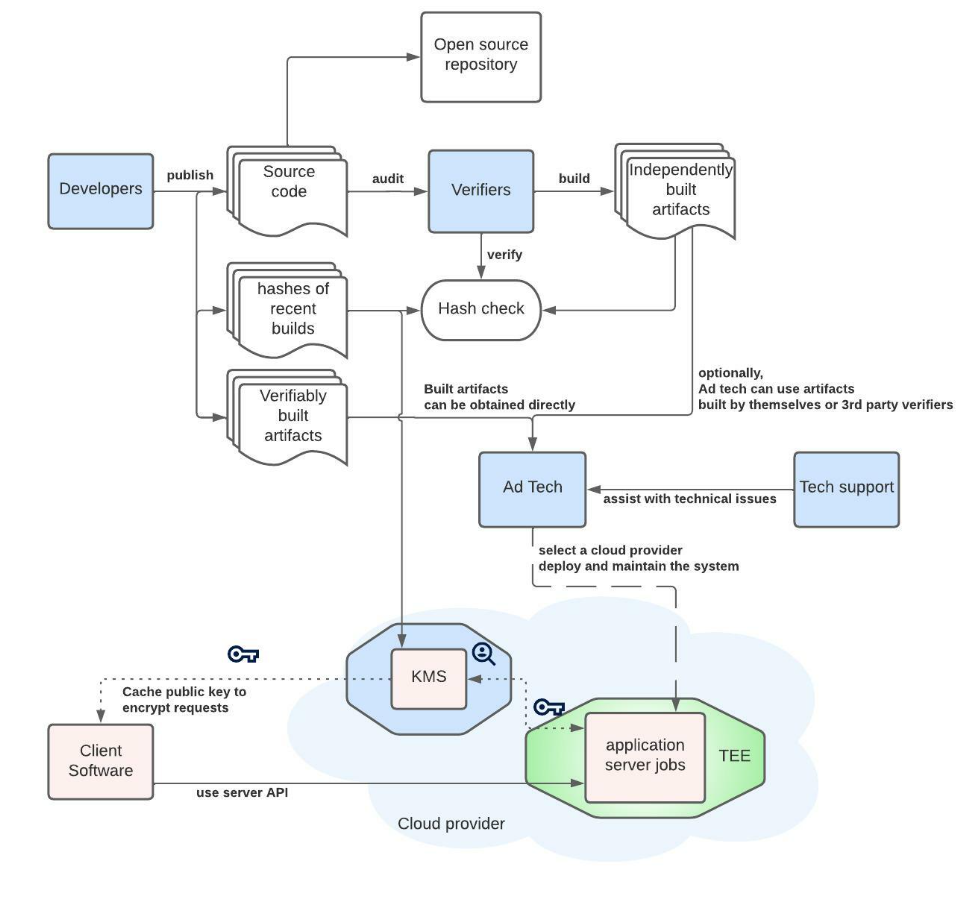

As can be seen in the diagram[24]:

- The TEE must run a verified software image; otherwise, the key management service (KMS) must not release the keys to it.

- The cloud provider must attest that the TEE is running an unmodified, tamper-proof verified image.

- The client has to trust the cloud provider’s KMS and obtain the public encryption keys from it.

- The client must encrypt K/V lookup requests with these public keys.

- The software inside the TEE (and only it) can decrypt and process the request.
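The steps in the list above can be modelled as a toy Python sketch. A hypothetical `ToyKMS` hands its public key to anyone but releases the matching private key only to an environment whose measured image matches the approved one, so a hypothetical `ToyTEE` can decrypt client lookups only if it is running the verified image. For illustration only, textbook RSA with tiny primes (p = 61, q = 53) stands in for real public-key encryption, and a SHA-256 hash of the image stands in for a hardware-signed attestation report. Both substitutions are insecure and are not how the production service works.

```python
import hashlib
import hmac
from dataclasses import dataclass

# Toy textbook-RSA key pair built from p = 61, q = 53. INSECURE: illustration only.
N, E, D = 3233, 17, 2753


def encrypt(data: bytes, n: int = N, e: int = E) -> list[int]:
    """Encrypt each byte separately with the public key (n, e)."""
    return [pow(b, e, n) for b in data]


def decrypt(cipher: list[int], n: int, d: int) -> bytes:
    """Recover the bytes with the private exponent d."""
    return bytes(pow(c, d, n) for c in cipher)


@dataclass
class ToyKMS:
    """Hypothetical KMS: publishes the public key, guards the private one."""
    approved_measurement: str  # hash of the verified software image
    public_key: tuple[int, int] = (N, E)
    private_exponent: int = D

    def release_private_key(self, attested_measurement: str) -> int:
        # Keys are released only to an environment running the verified image.
        if not hmac.compare_digest(attested_measurement, self.approved_measurement):
            raise PermissionError("image does not match the verified measurement")
        return self.private_exponent


class ToyTEE:
    """Hypothetical TEE hosting a key/value store."""

    def __init__(self, image: bytes, kms: ToyKMS):
        # Stand-in for attestation: a real TEE's hardware measures the image and
        # the report is signed; here we simply hash the image.
        measurement = hashlib.sha256(image).hexdigest()
        self._d = kms.release_private_key(measurement)  # raises if unverified
        self._n = kms.public_key[0]
        self._store = {"interest-group-1": "bid-signal-42"}  # hypothetical data

    def lookup(self, encrypted_request: list[int]) -> str:
        # Only code holding the private key (inside the TEE) can read the request.
        key = decrypt(encrypted_request, self._n, self._d).decode()
        return self._store.get(key, "")


verified_image = b"kv-service build 1.0"
kms = ToyKMS(approved_measurement=hashlib.sha256(verified_image).hexdigest())

# Client side: obtain the public key from the KMS and encrypt the lookup.
n, e = kms.public_key
request = encrypt(b"interest-group-1", n, e)

tee = ToyTEE(verified_image, kms)
print(tee.lookup(request))  # bid-signal-42

# A modified image is refused its keys and so can never decrypt requests.
try:
    ToyTEE(b"kv-service build 1.0 (modified)", kms)
    tampered_accepted = True
except PermissionError as err:
    tampered_accepted = False
    print("KMS refused:", err)
```

In this sketch the client never checks the TEE directly: its confidence rests entirely on the KMS refusing keys to unverified images, which is why the cloud provider's KMS and attestation are the linchpin of the design.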

Therefore, if the cloud provider has passed the certification (which is a requirement for client devices to trust its services), and the security of its solutions can be reasonably guaranteed, the client data is secure (although the threats[25] identified by the Protected Audience API designers have to be kept in mind).